When building your own operating system, the moment when you first write data to a real physical hard disk of a real PC is nothing less than thrilling – after all, making a mistake at this point could mean that you happily overwrite data on your hard drive randomly and wipe out important data on your test machine.

So working with real hard drives is not for the faint of heart – but of course, no operating system would be complete without that ability. In this post, we look at the basic concepts behind the communication between the operating system and the hard drive.

First, let us see how data on a hard drive is stored. The most basic conceivable hard drive consists of a thin disk, called a platter, on which the data is stored, and a device called a head that is able to read and write data. The disk spins and the head can be moved back and forth across the disk, so that it can be positioned at an arbitrary distance from the center of the disk.

Physically, the disk is organized in concentric tracks. When the head is positioned at a certain distance from the center of the disk, it can therefore access an individual track. Tracks are further separated into sectors, as shown in the upper part of the diagram below (taken from this Wikipedia page). Finally, a block is the intersection of a sector with a track. So to identify a specific block, you could use the combination of a sector and the track on which the block is located.

This simple design actually wastes some space, as you can only use one side of the disk. If you want to store data on both sides, you need two heads – one below and one above the disk. You could even increase the capacity further by building a stack of platters, with heads located between the platters so that each side of a platter corresponds to one head reading from it and writing to it, as displayed in the lower part of the diagram.

In this setup, the union of the tracks located at corresponding positions on all platters is called a cylinder. If you know the cylinder and the head, you know the track. You could therefore specify the location of a block on the disk by the combination of a cylinder, a head and a sector. This addressing method is called CHS addressing and was the first addressing mode used on early IBM PCs and compatible machines.

Later, the actual geometry of the hard drive became more complicated, and addressing schemes that decouple the address from the physical geometry were introduced, most notably the so-called logical block addressing (LBA). In that addressing mode, which is the current standard, a hard disk is thought of as a sequence of blocks, numbered starting with zero. To access a block, you simply specify the number of the block. The hard disk controller, i.e. the circuitry that actually operates the hard drive and sits between the CPU and the drive itself, will convert this LBA number into the actual physical location of the data on the drive.
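To make the relation between the two addressing modes concrete, here is a minimal sketch of the conventional mapping between a CHS triple and an LBA number. It assumes a fixed logical geometry (heads per cylinder, sectors per track), which a modern drive only emulates; the struct and function names are made up for this illustration.

```c
#include <stdint.h>

/* Hypothetical logical geometry; on real hardware it is reported by the drive. */
struct chs_geometry {
    uint32_t heads_per_cylinder;
    uint32_t sectors_per_track;
};

struct chs_address {
    uint32_t cylinder; /* counted from 0 */
    uint32_t head;     /* counted from 0 */
    uint32_t sector;   /* counted from 1, by CHS convention */
};

/* Blocks are numbered sector by sector within a track, then head by head
 * within a cylinder, then cylinder by cylinder. */
static uint32_t chs_to_lba(const struct chs_geometry *g, struct chs_address a)
{
    return (a.cylinder * g->heads_per_cylinder + a.head) * g->sectors_per_track
           + (a.sector - 1);
}

/* Inverse mapping: recover cylinder, head and sector from a block number. */
static struct chs_address lba_to_chs(const struct chs_geometry *g, uint32_t lba)
{
    struct chs_address a;
    a.cylinder = lba / (g->heads_per_cylinder * g->sectors_per_track);
    a.head     = (lba / g->sectors_per_track) % g->heads_per_cylinder;
    a.sector   = (lba % g->sectors_per_track) + 1;
    return a;
}
```

With 16 heads and 63 sectors per track, for instance, the first sector of cylinder 1, head 0 is block 16 × 63 = 1008.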

So how do we actually access a block? The easiest way to do this is usually called programmed input/output (PIO). To explain this, we first need to recall that a CPU does not only access the system memory, but can also talk to devices using designated channels, so-called ports. If the CPU writes to a port, the data will be sent to the device, and vice versa, data written by the device can be accessed by the CPU by reading from a port.

So to read from a hard drive, we could proceed as follows. First, we would write a command to a specific port associated with the hard drive. This command would tell the hard drive what to do – for instance to read – and it would contain the LBA number of the block we want to read. Then the hard drive controller would perform the actual read operation, and once complete, would send the data back to the CPU that would then read it from a port.

The format of the commands and the exact interaction between the CPU and the device in this process were defined in a standard called ATA, also called IDE or Parallel ATA (PATA). This specification did not only describe the interaction between the hard disk controller and the CPU, but also the low-level electronics, cables, connectors and so forth.
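As an illustration of what such a command looks like, the following sketch computes the register values for an ATA PIO read using a 28-bit LBA. The register offsets and the command code 0x20 (READ SECTORS) are taken from the ATA register layout as I understand it; the function and struct names are hypothetical, and no port is actually written, so the code can be run in user space.

```c
#include <stdint.h>

/* Register offsets relative to the I/O base of an ATA channel
 * (0x1F0 for the primary channel on legacy PC hardware). */
enum ata_reg {
    ATA_REG_SECTOR_COUNT = 2,
    ATA_REG_LBA_LOW      = 3,
    ATA_REG_LBA_MID      = 4,
    ATA_REG_LBA_HIGH     = 5,
    ATA_REG_DEVICE       = 6,
    ATA_REG_COMMAND      = 7
};

#define ATA_CMD_READ_SECTORS 0x20 /* PIO read with a 28-bit LBA */

/* One pending register write; this type exists only for this sketch. */
struct ata_reg_write {
    uint8_t reg;
    uint8_t value;
};

/* Fill out[0..5] with the register writes for a 28-bit LBA read, in the
 * order in which they would be issued. Returns 0 on success and -1 if the
 * LBA does not fit into 28 bits. */
static int ata_build_read28(uint32_t lba, uint8_t sector_count,
                            int second_device, struct ata_reg_write out[6])
{
    if (lba > 0x0FFFFFFFu)
        return -1;

    /* Bit 6 selects LBA mode, bit 4 selects the device on the channel,
     * bits 3..0 carry LBA bits 27..24. Bits 7 and 5 are set by convention. */
    out[0].reg   = ATA_REG_DEVICE;
    out[0].value = (uint8_t)(0xE0 | (second_device ? 0x10 : 0x00)
                             | ((lba >> 24) & 0x0F));
    out[1].reg   = ATA_REG_SECTOR_COUNT;
    out[1].value = sector_count;
    out[2].reg   = ATA_REG_LBA_LOW;
    out[2].value = (uint8_t)(lba & 0xFF);
    out[3].reg   = ATA_REG_LBA_MID;
    out[3].value = (uint8_t)((lba >> 8) & 0xFF);
    out[4].reg   = ATA_REG_LBA_HIGH;
    out[4].value = (uint8_t)((lba >> 16) & 0xFF);
    /* Writing the command register last starts the operation. */
    out[5].reg   = ATA_REG_COMMAND;
    out[5].value = ATA_CMD_READ_SECTORS;
    return 0;
}
```

A real driver would then send each entry to the port at base address plus `reg`, typically with the x86 `out` instruction wrapped in a small helper.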

This method of reading and writing data is simple, but has a major disadvantage – it keeps the CPU busy. In the worst case, the CPU has to write the command, wait in a busy loop and then read the data one word at a time – and if you want to read several blocks, you have to do this over and over again. Not surprisingly, alternatives were soon developed.
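The busy loop can be sketched as follows. Port access is hidden behind function pointers so that the example runs against a simulated drive; the status bits (BSY, DRQ, ERR) follow the ATA status register layout, while `pio_ops`, `fake_drive` and the other names are assumptions made for this illustration only.

```c
#include <stddef.h>
#include <stdint.h>

#define ATA_STATUS_ERR 0x01 /* the command failed */
#define ATA_STATUS_DRQ 0x08 /* data is ready to be transferred */
#define ATA_STATUS_BSY 0x80 /* the drive is still working */

#define WORDS_PER_SECTOR 256 /* 512 bytes, read as 16-bit words */

/* Hypothetical abstraction of the port accesses. */
struct pio_ops {
    uint8_t  (*read_status)(void *ctx);
    uint16_t (*read_data)(void *ctx);
    void *ctx;
};

/* Poll the status register, then read one sector word by word.
 * Returns 0 on success, -1 on a device error, -2 on timeout. */
static int pio_read_sector(const struct pio_ops *ops, uint16_t *buf,
                           unsigned max_polls)
{
    for (unsigned i = 0; i < max_polls; i++) {
        uint8_t status = ops->read_status(ops->ctx);
        if (status & ATA_STATUS_BSY)
            continue; /* the CPU does nothing useful here */
        if (status & ATA_STATUS_ERR)
            return -1;
        if (status & ATA_STATUS_DRQ) {
            for (size_t w = 0; w < WORDS_PER_SECTOR; w++)
                buf[w] = ops->read_data(ops->ctx);
            return 0;
        }
    }
    return -2;
}

/* Simulated drive: busy for a number of polls, then delivers 0, 1, 2, ... */
struct fake_drive {
    unsigned busy_polls;
    uint16_t next_word;
};

static uint8_t fake_status(void *ctx)
{
    struct fake_drive *d = (struct fake_drive *)ctx;
    if (d->busy_polls > 0) {
        d->busy_polls--;
        return ATA_STATUS_BSY;
    }
    return ATA_STATUS_DRQ;
}

static uint16_t fake_data(void *ctx)
{
    struct fake_drive *d = (struct fake_drive *)ctx;
    return d->next_word++;
}
```

Every iteration of the outer loop and every one of the 256 data reads is work done by the CPU itself, which is exactly the cost described above.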

The first improvement we can make is to make the operation interrupt-driven. In this version, the CPU sends a command and can then go off and do something else while the hard disk controller is working. Once the data has been read, the controller informs the CPU that data is available by raising an interrupt, and the CPU can go ahead and fetch the data. This is already more efficient, but still suffers from the fact that the CPU has to read the data word by word.

Consequently, the next step in the development was what is called Direct Memory Access (DMA). With DMA, the hard disk controller can communicate directly with the memory controller and transfer the data read directly into a designated area of the system's main memory without any involvement of the CPU. Only once all the data has been read is an interrupt raised to inform the CPU that the data has been transferred and can be worked with. With this approach, large blocks of data can be read and written while the CPU is able to work on other threads.
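How the controller learns where the designated memory area is depends on the hardware. As one example, and as far as I know the bus-master IDE interface, legacy IDE controllers are handed a table of physical region descriptors (PRDs), each describing one contiguous buffer that must not cross a 64 KiB boundary. The sketch below fills one such entry; the layout reflects my reading of that interface, and the names `prd_entry` and `prd_fill` are invented here.

```c
#include <stdint.h>

/* One physical region descriptor as used by bus-master IDE controllers. */
struct prd_entry {
    uint32_t phys_addr;  /* physical address of the buffer, word aligned */
    uint16_t byte_count; /* length in bytes, 0 encodes 64 KiB */
    uint16_t flags;      /* bit 15 marks the last entry of the table */
};

#define PRD_END_OF_TABLE 0x8000

/* Fill one descriptor. Returns 0 on success and -1 if the region is empty,
 * too long, not word aligned or crosses a 64 KiB boundary. */
static int prd_fill(struct prd_entry *e, uint32_t phys, uint32_t len, int last)
{
    if (len == 0 || len > 0x10000u)
        return -1;
    if ((phys & 1u) || (len & 1u))
        return -1;
    /* First and last byte must lie in the same 64 KiB window. */
    if ((phys >> 16) != ((phys + len - 1) >> 16))
        return -1;

    e->phys_addr  = phys;
    e->byte_count = (uint16_t)(len & 0xFFFFu);
    e->flags      = last ? PRD_END_OF_TABLE : 0;
    return 0;
}
```

The address stored in the descriptor is a physical address, so a kernel with paging enabled has to translate the virtual address of its buffer first.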

In 2000, a serial version of the ATA standard called Serial ATA (SATA) was introduced. Due to the serial processing, SATA allows much higher transfer rates between the hard drive and the motherboard and therefore a much higher I/O throughput, and it soon evolved into the de-facto standard, also for consumer PCs. A SATA device can still operate in a so-called legacy mode, where it is presented to the CPU as a PATA/IDE device. However, to take advantage of some new features of the SATA protocol, for instance a higher number of devices per controller, a new protocol called AHCI needs to be used for the communication between the CPU and the controller. The AHCI protocol is significantly more complicated than the ATA protocol, but in fact both protocols still follow the same logical steps to read and write data.

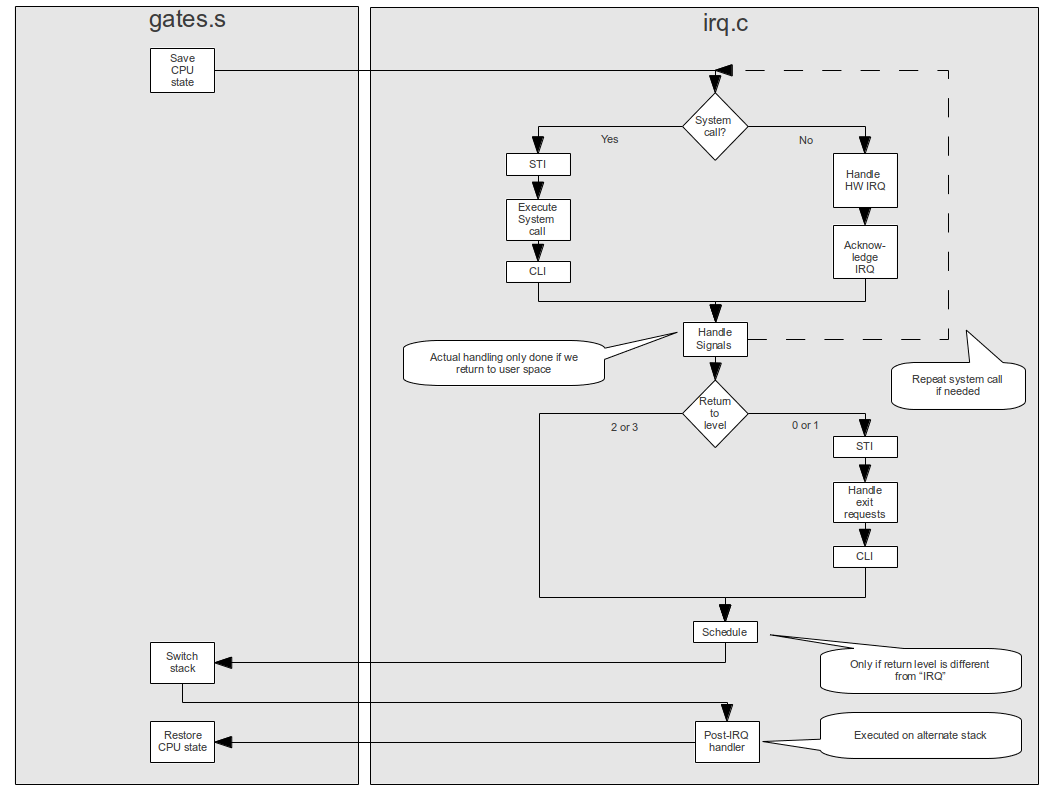

Let us now combine this understanding with what we have learned about interrupts and multitasking to see how the operating system would typically orchestrate a disk drive access, for instance to read data from the disk. The following diagram illustrates the process.

First, the operating system reserves an area of system memory for the DMA transfer. Then, the kernel prepares the command. The format of the command depends on the protocol used (AHCI or legacy IDE), but typically, the command would encompass the location of the data on the disk to be read, the number of blocks to be read and the location of the DMA buffer. Then the command is sent to the hard drive.

At this point in time, the CPU is free to work on something else, so the kernel would typically put the current task to sleep and re-schedule so that a different task can start executing.

While this happens, the hard drive starts to execute the command. It reads the requested data and places it in the DMA buffer prepared by the OS. Then it raises an interrupt to indicate that the data has arrived in main memory.

Once the operating system receives the interrupt, it will stop the currently executing task and switch back to the task that requested the read. This task can now continue execution and work with the data.
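The whole sequence can be played through in a small user-space simulation. This is only a sketch under simplifying assumptions: the "disk" is an array in memory, the DMA transfer is a `memcpy`, there is a single request in flight, and all names (`disk_request`, `sim_disk`, `submit_read` and so on) are hypothetical.

```c
#include <stdint.h>
#include <string.h>

#define BLOCK_SIZE 512

enum task_state { TASK_RUNNING, TASK_SLEEPING, TASK_READY };

struct disk_request {
    uint32_t lba;            /* first block to read */
    uint32_t block_count;    /* number of blocks to read */
    uint8_t *dma_buffer;     /* memory reserved by the kernel */
    enum task_state *owner;  /* state of the task waiting for the data */
    int done;
};

struct sim_disk {
    const uint8_t *media;         /* simulated disk contents */
    struct disk_request *pending; /* the request currently being executed */
};

/* Kernel side: send the command and put the requesting task to sleep. */
static void submit_read(struct sim_disk *disk, struct disk_request *req)
{
    req->done = 0;
    disk->pending = req;
    *req->owner = TASK_SLEEPING;
}

/* Device side: read the blocks and place them in the DMA buffer. */
static void device_execute(struct sim_disk *disk)
{
    struct disk_request *req = disk->pending;
    if (req == NULL)
        return;
    memcpy(req->dma_buffer,
           disk->media + (size_t)req->lba * BLOCK_SIZE,
           (size_t)req->block_count * BLOCK_SIZE);
}

/* Kernel side: interrupt handler, marks the request as complete and makes
 * the waiting task runnable so that the scheduler can switch back to it. */
static void disk_irq_handler(struct sim_disk *disk)
{
    struct disk_request *req = disk->pending;
    if (req == NULL)
        return; /* spurious interrupt */
    req->done = 1;
    *req->owner = TASK_READY;
    disk->pending = NULL;
}
```

Calling `submit_read`, `device_execute` and `disk_irq_handler` in this order corresponds to the steps above; in a real kernel the second step runs on the device, in parallel to whatever task the scheduler selected in the meantime.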

Of course, the real picture is more complicated. What the image above calls the “device”, for instance, is in reality a combination of the actual hard disk controller and components of your motherboard's chipset, like the AHCI controller or the DMA controller. In addition, several hard drives can operate in parallel, so the kernel needs a way to keep track of the currently executing requests and map incoming interrupts to the requests currently in flight. And, in an SMP environment with more than one CPU, semaphores or other locking mechanisms need to be in place to make sure that different requests do not collide. If you are interested in all those details, you might want to take a look at the documentation of the ctOS hard disk driver.
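One simple way to do this bookkeeping, sketched here under the assumption that each drive executes at most one request at a time, is a small table of in-flight requests that the interrupt handler searches by drive number. The names are invented for this example, it is not how ctOS necessarily does it, and the locking needed on an SMP system is deliberately left out.

```c
#include <stdint.h>

#define MAX_IN_FLIGHT 4

struct inflight_request {
    int in_use;
    int drive;    /* drive the request was sent to */
    uint32_t lba; /* block the request refers to */
};

struct inflight_table {
    struct inflight_request slots[MAX_IN_FLIGHT];
};

/* Register a new request. Returns the slot index, or -1 if the drive is
 * already busy or the table is full. */
static int inflight_add(struct inflight_table *t, int drive, uint32_t lba)
{
    int free_slot = -1;
    for (int i = 0; i < MAX_IN_FLIGHT; i++) {
        if (t->slots[i].in_use && t->slots[i].drive == drive)
            return -1;
        if (!t->slots[i].in_use && free_slot < 0)
            free_slot = i;
    }
    if (free_slot < 0)
        return -1;
    t->slots[free_slot].in_use = 1;
    t->slots[free_slot].drive = drive;
    t->slots[free_slot].lba = lba;
    return free_slot;
}

/* Called when a drive raises an interrupt: find the matching request,
 * report its LBA and release the slot. Returns the slot index, or -1 if
 * no request is in flight for this drive (a spurious interrupt). */
static int inflight_complete(struct inflight_table *t, int drive,
                             uint32_t *lba_out)
{
    for (int i = 0; i < MAX_IN_FLIGHT; i++) {
        if (t->slots[i].in_use && t->slots[i].drive == drive) {
            *lba_out = t->slots[i].lba;
            t->slots[i].in_use = 0;
            return i;
        }
    }
    return -1;
}
```

On a multiprocessor system, both functions would have to run under a lock that is also safe to take from interrupt context, for instance a spinlock with interrupts disabled.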

We now understand how a hard drive and an operating system kernel communicate to read or write sectors. However, an application of course does not see individual blocks of a hard disk; instead, the hard disk appears as a collection of files and directories. The translation between this logical view and the low-level view is done by the file system, which we will look at in the next post in this series.